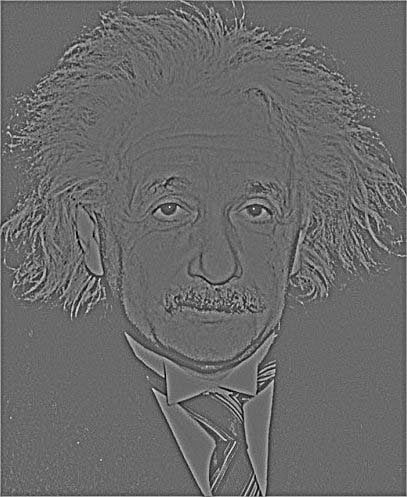

The recent Mirror newspaper advert in the UK has brought a classic optical illusion back into the public eye: a hybrid image of Marilyn Monroe and Albert Einstein which, up close, looks like Einstein but, from further away or with squinted eyes, looks like Marilyn.

Try it out! From close up Einstein's trademark hair and moustache jump out, but squint or stand back from the screen and you can see a classic shot of Marilyn's curls, eyelashes and smile. A version of this illusion was first made by Aude Oliva for a feature in New Scientist, and it is a really striking example of a hybrid image illusion.

So what is your brain doing? And how can you make an image like this? Making an image is actually quite simple. First of all take pictures of these two pop icons with similar(ish) lighting and align them so their main features (eyes, mouth, overall face) are at the same size and position in the images:

The trick is then to use a Fourier bandpass filter to filter out low frequency structure in the Einstein image, and filter out high frequency structure in the Marilyn image. You can find Fourier bandpass filters (sometimes called FFT filters) for many image editing programs.

So what is a Fourier bandpass filter? Without diving into too much maths it is a way of separating out information based on its wavelength. Filtering out low frequency structure in an image leaves only the short wavelength features, i.e. fine lines and sharp edges, while filtering out high frequency structure leaves only the long wavelengths, i.e. the general brightness of different parts of the image.
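To make this a bit more concrete, here is a minimal sketch of a wavelength filter written with NumPy. It is not the filter from any particular image editing program: the function name `fourier_wavelength_filter` and its parameters are made up for illustration, it assumes a greyscale image stored as a 2D array, and it uses a hard cutoff, which real tools usually soften to avoid ringing.

```python
import numpy as np

def fourier_wavelength_filter(image, cutoff_px, keep="short"):
    """Keep only structure with wavelength shorter or longer than cutoff_px.

    Hypothetical helper: `image` is a 2D greyscale array and `cutoff_px`
    is the cutoff wavelength in pixels.
    """
    image = np.asarray(image, dtype=float)
    # Spatial frequency (cycles per pixel) of every FFT bin along each axis.
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    radial_freq = np.hypot(fx, fy)
    cutoff_freq = 1.0 / cutoff_px  # wavelength = 1 / frequency
    if keep == "short":
        # Short wavelengths are high frequencies: fine lines and sharp edges.
        mask = radial_freq > cutoff_freq
    elif keep == "long":
        # Long wavelengths are low frequencies: overall light and shadow.
        mask = radial_freq <= cutoff_freq
    else:
        raise ValueError("keep must be 'short' or 'long'")
    spectrum = np.fft.fft2(image)
    # The mask is symmetric, so the result is real up to rounding error.
    return np.real(np.fft.ifft2(spectrum * mask))
```

With two aligned greyscale arrays, say `einstein` and `marilyn` (hypothetical names), the filtering described here would be roughly `fourier_wavelength_filter(einstein, 5, keep="short")` and `fourier_wavelength_filter(marilyn, 10, keep="long")`. Note that keeping only short wavelengths also removes the average brightness, so that result is centred on zero.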

Einstein with a <5px wavelength Fourier bandpass filter

Marilyn with a >10px wavelength Fourier bandpass filter

Fourier bandpass filters are easier to understand intuitively with sound rather than an image. It might help to imagine using a Fourier bandpass filter on some music: a low frequency (long wavelength) bandpass filter would leave only the bassline and bass drum, while a high frequency (short wavelength) bandpass filter would leave vocal lines and high pitched instruments and drums.
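The sound version is easy to try with a toy signal. The sketch below is only an illustration: `keep_band`, the 8000 Hz sample rate and the two test tones (a 60 Hz "bass" and a 2000 Hz "treble") are all invented for the example, and it assumes NumPy is available.

```python
import numpy as np

def keep_band(signal, sample_rate, low_hz, high_hz):
    """Keep only the frequencies between low_hz and high_hz (hypothetical helper)."""
    signal = np.asarray(signal, dtype=float)
    spectrum = np.fft.rfft(signal)
    # Frequency in Hz of each bin in the one-sided spectrum.
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / sample_rate)
    mask = (freqs >= low_hz) & (freqs <= high_hz)
    return np.fft.irfft(spectrum * mask, n=signal.size)

sample_rate = 8000                        # samples per second (made up)
t = np.arange(sample_rate) / sample_rate  # one second of time stamps
bass = np.sin(2 * np.pi * 60 * t)         # stand-in for the bassline
treble = 0.5 * np.sin(2 * np.pi * 2000 * t)  # stand-in for a high instrument
mix = bass + treble

bass_only = keep_band(mix, sample_rate, 0, 200)        # long wavelengths
treble_only = keep_band(mix, sample_rate, 1000, 4000)  # short wavelengths
```

Each filtered signal contains just one of the two tones, which is the same separation that the image filters perform in two dimensions.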

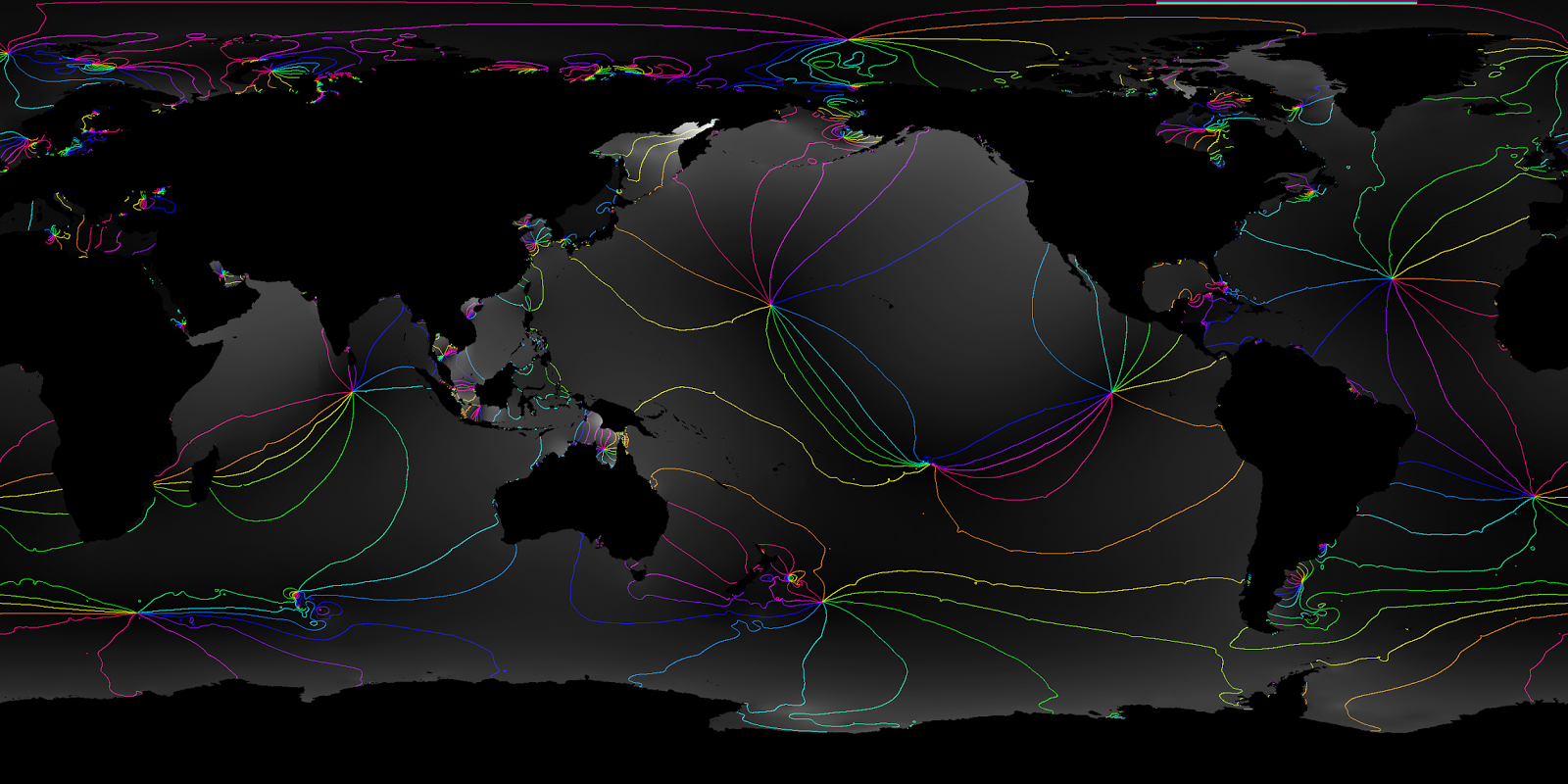

If you are more mathematically minded it might be useful to imagine this through some graphs. These are plots of how bright the image is as you go along a line across the middle of the two images. It is easy to see that the filtering of the Einstein image only leaves the short wavelength data, and the filtering of the Marilyn image only leaves the long wavelength data:

You can also imagine a long wavelength Fourier bandpass filter as a blurring, and a short wavelength Fourier bandpass filter as the inverse of blurring; grabbing the details that are lost when the image is blurred.
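That blurring picture can be written down directly. This is a rough sketch rather than an exact equivalent of a Fourier bandpass filter: it swaps in a Gaussian blur for the long wavelength filter, and takes the original minus the blur for the short wavelength one. The helper names `gaussian_blur` and `split_detail`, and the choice of `sigma`, are assumptions for illustration.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Blur a 2D greyscale array with a Gaussian of width sigma (in pixels)."""
    image = np.asarray(image, dtype=float)
    radius = int(3 * sigma) + 1
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()  # normalise so overall brightness is preserved
    # Repeat the edge pixels so the borders do not darken.
    padded = np.pad(image, radius, mode="edge")
    # A Gaussian blur is separable: blur every row, then every column.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def split_detail(image, sigma):
    """Return (blurred, detail), where detail is what the blur threw away."""
    image = np.asarray(image, dtype=float)
    blurred = gaussian_blur(image, sigma)  # long wavelengths
    detail = image - blurred               # short wavelengths
    return blurred, detail
```

Adding `blurred` and `detail` back together recovers the original image, which is a handy check that nothing has been lost in the split.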

Having made the two Fourier bandpass images it is simply a matter of averaging the two together to get the final product:
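As a sketch of that averaging step, assuming the two filtered images are already aligned, the same size and held as NumPy arrays. The function name `average_images` is made up, and the final stretch to the 0 to 1 range is my own addition so the result is easy to display; it is not part of the recipe above.

```python
import numpy as np

def average_images(fine_detail, coarse_structure):
    """Average two filtered greyscale images into one hybrid image."""
    a = np.asarray(fine_detail, dtype=float)
    b = np.asarray(coarse_structure, dtype=float)
    if a.shape != b.shape:
        raise ValueError("images must be aligned and the same size")
    hybrid = 0.5 * (a + b)  # plain pixel-by-pixel average
    # Assumption: stretch the result to 0..1 for display.
    lo, hi = hybrid.min(), hybrid.max()
    if hi > lo:
        hybrid = (hybrid - lo) / (hi - lo)
    return hybrid
```

Swapping which image supplies the fine detail and which supplies the coarse structure is just a matter of filtering the two photos the other way round before averaging.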

So how does it work? The trick is simply based on a limitation of how well you can see. From a greater distance your eyes are less able to see the fine detail of the image, so your brain interprets only the big structures. In this case this leaves your brain to latch onto the Marilyn part of the image, helped by the fact that many of her photos are extremely recognisable.

From closer in, your eyes can now resolve the fine detail in the image, and your brain does its best at interpreting a slightly messy image. Because the photos of Einstein and Marilyn are fairly similar (light skin on a dark background, with big hair) your brain can do a decent job of merging the fine detail of Einstein's face onto the general light and shadow of Marilyn's face.

By switching the filtering of the two images you can get the reverse effect...

... although I do find Marilyn's teeth in this photo quite terrifying!

Software used: